Reliable automation is not about writing more tests; it’s about engineering a system your teams can trust.

Test automation promises speed, confidence, and continuous feedback. Yet, in many organizations, it slowly becomes a liability: pipelines fail randomly, engineers spend hours investigating false alarms, and releases are delayed for reasons unrelated to product quality.

This happens when automation is treated as a collection of scripts instead of a real engineering product. Scripts execute. Platforms scale.

Modern QA is evolving from “test execution” to quality engineering. That shift requires architecture, observability, data control, and business alignment.

In this article, we explore how to move from fragile automation to stable, scalable, and trusted test platforms that actively support delivery instead of blocking it.

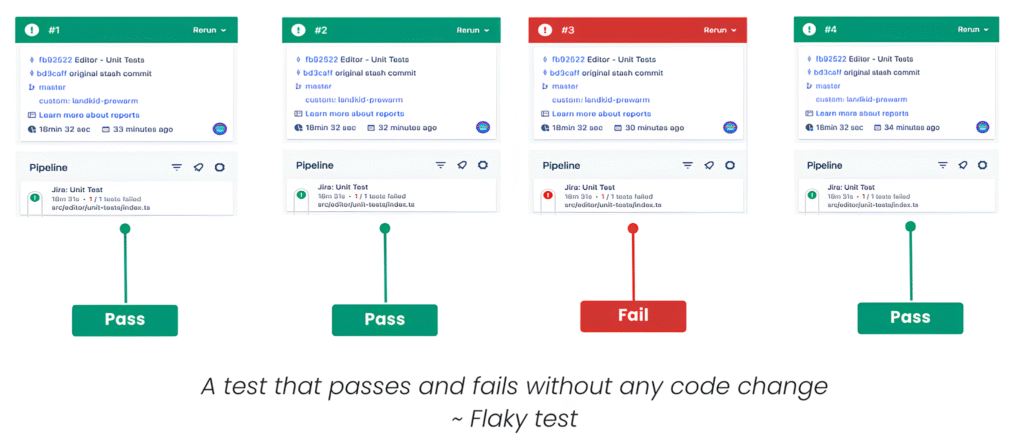

What Flaky Tests Really Cost

A flaky test is one that behaves inconsistently without changes in the application. It passes locally, fails in CI, passes after a rerun, and fails again tomorrow.

The visible cost is time.

The hidden cost is trust.

Flakiness leads to:

- Engineers ignoring red pipelines.

- QA wasting cycles on false defects.

- Slower feedback loops.

- Reduced confidence in releases.

Once teams stop believing in automation, manual verification returns, and delivery speed collapses.

Automation must be a signal, not noise.

Root Causes of Instability

1. Time-Based Waiting Instead of State-Based Waiting

Many suites still rely on fixed delays:

Thread.sleep(5000);

This assumes infrastructure, network, and browsers behave consistently, which they never do.

Stable automation reacts to state, not to time:

- Element visibility.

- API response readiness.

- DOM stability.

- Business completion events.

Instead of sleeping, automation should observe reality:

$("#confirm").shouldBe(enabled).click();

Waiting for conditions makes automation resilient to load, latency, and execution variability.
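For code that has no UI library behind it (API readiness, a background job finishing), the same idea can be written by hand. The following is a minimal sketch of a polling wait in plain Java; the class name `Waits`, the method `waitUntil`, and its parameters are illustrative assumptions, not part of any library mentioned in this article. UI libraries such as Selenium (`WebDriverWait`) already ship their own equivalents, which should be preferred where available.

```java
import java.time.Duration;
import java.util.function.BooleanSupplier;

/** Hypothetical helper: waits for a state instead of sleeping for a fixed time. */
final class Waits {
    private Waits() {}

    /**
     * Polls the condition until it holds or the timeout elapses.
     * Returns as soon as the condition is true, so fast environments are not slowed down.
     */
    static void waitUntil(String description, BooleanSupplier condition,
                          Duration timeout, Duration pollInterval) {
        long start = System.nanoTime();
        while (true) {
            if (condition.getAsBoolean()) {
                return; // state reached, continue immediately
            }
            if (System.nanoTime() - start >= timeout.toNanos()) {
                throw new IllegalStateException(
                        "Timed out after " + timeout.toMillis() + " ms waiting for: " + description);
            }
            try {
                Thread.sleep(pollInterval.toMillis()); // short pause between checks only
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException("Interrupted while waiting for: " + description, e);
            }
        }
    }
}
```

A call might look like `Waits.waitUntil("order is confirmed", () -> orderIsConfirmed(), Duration.ofSeconds(10), Duration.ofMillis(200))`, where `orderIsConfirmed()` stands for whatever check the test needs. The timeout is an upper bound, not a cost paid on every run, which is the practical difference from a fixed sleep.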

2. Weak Object Identification Strategy

Selectors are contracts between UI and automation.

When automation depends on DOM structure, it breaks with every refactor. When it depends on meaning, it survives evolution.

Bad approach:

/html/body/div[3]/div[2]/span

Strong approach:

- data-testid

- stable ids

- semantic attributes

$("[data-testid='confirm-payment']")

Object identification should be discussed with developers, not discovered by testers. Stability starts in design, not in XPath tricks.
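One way to keep that contract in a single place is a small helper that builds the selector from the agreed test id, so individual tests never spell out DOM details. This is a sketch only: `TestIds` and `byTestId` are hypothetical names, and the `data-testid` attribute is assumed to be the convention agreed with developers.

```java
/** Hypothetical helper: builds CSS selectors from test ids agreed with developers. */
final class TestIds {
    private TestIds() {}

    /** Returns an attribute selector such as [data-testid='confirm-payment']. */
    static String byTestId(String id) {
        if (id == null || id.isBlank()) {
            throw new IllegalArgumentException("test id must not be empty");
        }
        // Escape backslashes and single quotes so the id cannot break the selector.
        String escaped = id.replace("\\", "\\\\").replace("'", "\\'");
        return "[data-testid='" + escaped + "']";
    }
}
```

The result is a plain string, so it can be passed to whichever lookup call the suite already uses, for example `$(TestIds.byTestId("confirm-payment"))` in the style of the snippet above. If the attribute name ever changes, only this helper changes.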

3. Hidden Dependencies Between Tests

Many failures are caused by invisible coupling:

- Shared users.

- Shared sessions.

- Ordered execution.

- Persistent state between tests.

Each test must behave like a microservice:

- It creates its own context.

- It controls its own data.

- It cleans up after itself.

- It runs independently and in parallel.

Isolation transforms automation from fragile to industrial-grade.
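The four properties above can be sketched as a per-test context object. The example below is an illustration under stated assumptions: `TestContext`, `uniqueEmail`, and `onCleanup` are hypothetical names, and the cleanup actions are plain `Runnable`s standing in for real API calls.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.UUID;

/** Hypothetical per-test context: owns its data and cleans up in reverse order. */
final class TestContext implements AutoCloseable {
    // Unique per context, so parallel tests never share users or records.
    private final String id = UUID.randomUUID().toString().substring(0, 8);
    private final Deque<Runnable> cleanups = new ArrayDeque<>();

    /** Generates an address that no other test context will produce. */
    String uniqueEmail(String prefix) {
        return prefix + "+" + id + "@example.test";
    }

    /** Registers an action to run when the test finishes, whether it passed or failed. */
    void onCleanup(Runnable action) {
        cleanups.push(action);
    }

    /** Runs cleanups last-in, first-out; a failing cleanup does not stop the others. */
    @Override
    public void close() {
        RuntimeException first = null;
        while (!cleanups.isEmpty()) {
            try {
                cleanups.pop().run();
            } catch (RuntimeException e) {
                if (first == null) first = e; else first.addSuppressed(e);
            }
        }
        if (first != null) throw first;
    }
}
```

Used with try-with-resources (`try (TestContext ctx = new TestContext()) { ... }`), the cleanup runs even when an assertion fails, and because nothing is shared between contexts, execution order and parallelism stop mattering.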

4. Environment and Infrastructure Drift

CI environments are slower, noisier, and distributed. Automation must adapt.

Stability requires:

- Externalized configuration.

- Environment abstraction.

- Feature flag awareness.

- Controlled test data management.

- Strong logging and reporting.

Automation is no longer local execution — it is a distributed system client.
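Externalized configuration and feature flag awareness can be as simple as reading every environment-specific value from one place, with local defaults. The sketch below assumes environment variables as the source; the variable names `TEST_BASE_URL`, `TEST_TIMEOUT_MS`, and `FF_NEW_CHECKOUT`, the default values, and the `TestConfig` class itself are all hypothetical.

```java
import java.time.Duration;
import java.util.Map;

/** Hypothetical configuration holder: tests read settings here, never hard-code them. */
final class TestConfig {
    final String baseUrl;
    final Duration timeout;
    final boolean newCheckoutEnabled;

    private TestConfig(String baseUrl, Duration timeout, boolean newCheckoutEnabled) {
        this.baseUrl = baseUrl;
        this.timeout = timeout;
        this.newCheckoutEnabled = newCheckoutEnabled;
    }

    /** Builds the configuration from a key/value source, falling back to local defaults. */
    static TestConfig from(Map<String, String> env) {
        String baseUrl = env.getOrDefault("TEST_BASE_URL", "http://localhost:8080");
        long timeoutMs = Long.parseLong(env.getOrDefault("TEST_TIMEOUT_MS", "10000"));
        boolean newCheckout = Boolean.parseBoolean(env.getOrDefault("FF_NEW_CHECKOUT", "false"));
        return new TestConfig(baseUrl, Duration.ofMillis(timeoutMs), newCheckout);
    }

    /** Convenience entry point for real runs: reads the process environment. */
    static TestConfig fromEnvironment() {
        return from(System.getenv());
    }
}
```

With this shape, a slower CI environment can raise the timeout and a staging environment can switch the feature flag without any change to test code. Taking a `Map` rather than calling `System.getenv()` directly also makes the configuration logic itself easy to test.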

Automation Is Architecture, Not Scripts

Mature automation is designed, not hacked.

A scalable automation platform includes:

- Page Object Model (POM).

- Domain-driven steps.

- Behavior-driven readability.

- Reusable service layers.

- Centralized assertions.

- Clear separation of concerns.

Instead of technical actions:

click();

type();

submit();

You express business intent:

When the customer submits a signed document

Then the signature process is completed successfully

Tests become living documentation of the product.
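To make the layering concrete, here is a minimal sketch of how the signing scenario could be split into a page object and a domain step layer. Everything in it is an assumption for illustration: the `Ui` interface stands in for whatever browser library the suite uses, and the test ids, class names, and the "Completed" status text are invented.

```java
/** Hypothetical abstraction over the browser library; only page objects talk to it. */
interface Ui {
    void type(String selector, String text);
    void click(String selector);
    String textOf(String selector);
}

/** Page object: the only place that knows selectors and technical actions. */
final class SignaturePage {
    private static final String NAME = "[data-testid='signer-name']";
    private static final String SUBMIT = "[data-testid='submit-signature']";
    private static final String STATUS = "[data-testid='signature-status']";
    private final Ui ui;

    SignaturePage(Ui ui) { this.ui = ui; }

    void signAs(String name) {
        ui.type(NAME, name);
        ui.click(SUBMIT);
    }

    String status() { return ui.textOf(STATUS); }
}

/** Domain steps: named after business intent, with the assertion in one central place. */
final class SigningSteps {
    private final SignaturePage page;

    SigningSteps(Ui ui) { this.page = new SignaturePage(ui); }

    void customerSubmitsSignedDocument(String customerName) {
        page.signAs(customerName);
    }

    void signatureProcessIsCompleted() {
        String status = page.status();
        if (!"Completed".equals(status)) {
            throw new AssertionError("Expected signature status 'Completed' but was '" + status + "'");
        }
    }
}
```

A test then reads as `steps.customerSubmitsSignedDocument("Ada"); steps.signatureProcessIsCompleted();`, which maps directly onto the When/Then lines above. A UI refactor touches `SignaturePage` only, and a change in the business rule touches `SigningSteps` only.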

Data Management: The Hidden Stability Layer

Much flaky behavior comes from unmanaged data, not the UI.

Strong automation includes:

- Dynamic data generation.

- API-based setup.

- Idempotent scenarios.

- Controlled cleanup.

- Versioned datasets.

Tests should never fight for the same records.

Your data strategy is as important as your selector strategy.
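API-based, idempotent setup can be sketched as a "find or create" step that is safe to run any number of times. The example is illustrative only: `CustomerApi` is a hypothetical interface standing in for a real backend client, and `InMemoryCustomerApi` exists purely so the sketch runs without a server.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

/** Hypothetical backend client; a real one would make HTTP calls. */
interface CustomerApi {
    Optional<String> findIdByEmail(String email);
    String create(String email);
}

/** Setup step that prepares data through the API instead of clicking through the UI. */
final class CustomerSetup {
    private final CustomerApi api;

    CustomerSetup(CustomerApi api) { this.api = api; }

    /** Idempotent: repeated calls with the same email return the same customer id. */
    String ensureCustomer(String email) {
        return api.findIdByEmail(email).orElseGet(() -> api.create(email));
    }
}

/** In-memory stand-in used only to make this sketch runnable. */
final class InMemoryCustomerApi implements CustomerApi {
    private final Map<String, String> idsByEmail = new HashMap<>();
    int createCalls = 0;

    @Override
    public Optional<String> findIdByEmail(String email) {
        return Optional.ofNullable(idsByEmail.get(email));
    }

    @Override
    public String create(String email) {
        createCalls++;
        String id = "cust-" + createCalls;
        idsByEmail.put(email, id);
        return id;
    }
}
```

Note that find-then-create is not atomic. It makes a rerun of the same test safe, but it does not protect two parallel tests that use the same email, which is why it works best combined with dynamically generated, per-test values.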

Smart Retries and Self-Healing

Retries should expose problems, not hide them.

A mature approach:

- Detect flaky patterns.

- Tag unstable tests.

- Collect retry reasons.

- Fix root causes.

- Limit retry scope.

Some frameworks add self-healing locators, but engineering discipline always comes first. A flaky test hidden by retries becomes technical debt with interest.
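A retry that exposes problems keeps a record of every failed attempt and reports "passed after retry" as its own outcome. The following is a minimal sketch of that idea, not the retry mechanism of any particular test framework; `BoundedRetry`, `RetryResult`, and `isFlaky` are hypothetical names.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

/** Outcome of a retried test: keeps the reasons instead of discarding them. */
final class RetryResult {
    final boolean passed;
    final List<String> failureReasons;

    RetryResult(boolean passed, List<String> failureReasons) {
        this.passed = passed;
        this.failureReasons = Collections.unmodifiableList(new ArrayList<>(failureReasons));
    }

    /** Passed, but only after at least one failed attempt: a candidate for a root-cause fix. */
    boolean isFlaky() {
        return passed && !failureReasons.isEmpty();
    }
}

/** Hypothetical retry wrapper with a hard attempt limit. */
final class BoundedRetry {
    private BoundedRetry() {}

    static RetryResult run(Runnable test, int maxAttempts) {
        if (maxAttempts < 1) {
            throw new IllegalArgumentException("maxAttempts must be at least 1");
        }
        List<String> reasons = new ArrayList<>();
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            try {
                test.run();
                return new RetryResult(true, reasons);
            } catch (RuntimeException | AssertionError e) {
                // Record why the attempt failed so the pattern can be analysed later.
                reasons.add("attempt " + attempt + ": "
                        + e.getClass().getSimpleName() + ": " + e.getMessage());
            }
        }
        return new RetryResult(false, reasons);
    }
}
```

The pipeline can stay green for a result where `isFlaky()` is true, but the recorded reasons should feed a report or a tag on the test, so the instability is tracked rather than forgotten.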

Observability: Treat Automation Like Production

If production systems need monitoring, automation does too.

Add:

- Structured logs.

- Screenshots and videos.

- Failure classification.

- Metrics export.

- Trend dashboards.

Track:

- Flakiness rate.

- Execution duration trends.

- Failure root causes.

- Maintenance effort.

Automation must be observable, predictable, and continuously improvable.
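A flakiness rate needs a definition before it can be tracked. The sketch below assumes one possible definition: a test counts as flaky if it both passed and failed on the same commit, and the rate is the share of distinct tests that are flaky. That definition, and the names `TestRun` and `FlakinessReport`, are assumptions for illustration; teams may reasonably choose a different rule.

```java
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

/** One recorded execution of one test. */
final class TestRun {
    final String testName;
    final String commit;
    final boolean passed;

    TestRun(String testName, String commit, boolean passed) {
        this.testName = testName;
        this.commit = commit;
        this.passed = passed;
    }
}

/** Hypothetical report: flags tests with mixed outcomes on identical code. */
final class FlakinessReport {
    private FlakinessReport() {}

    /** Tests that both passed and failed on at least one commit. */
    static Set<String> flakyTests(List<TestRun> runs) {
        // test name -> commit -> set of observed outcomes (true, false, or both)
        Map<String, Map<String, Set<Boolean>>> outcomes = new HashMap<>();
        for (TestRun run : runs) {
            outcomes.computeIfAbsent(run.testName, k -> new HashMap<>())
                    .computeIfAbsent(run.commit, k -> new HashSet<>())
                    .add(run.passed);
        }
        Set<String> flaky = new TreeSet<>();
        for (Map.Entry<String, Map<String, Set<Boolean>>> entry : outcomes.entrySet()) {
            boolean mixed = entry.getValue().values().stream().anyMatch(o -> o.size() == 2);
            if (mixed) {
                flaky.add(entry.getKey());
            }
        }
        return flaky;
    }

    /** Share of distinct tests that are flaky, between 0.0 and 1.0. */
    static double flakinessRate(List<TestRun> runs) {
        long distinctTests = runs.stream().map(r -> r.testName).distinct().count();
        return distinctTests == 0 ? 0.0 : (double) flakyTests(runs).size() / distinctTests;
    }
}
```

A test that fails on one commit and passes on the next is not counted here, since the code changed between the runs and the failure may have been a real defect. Exporting this number per day or per pipeline gives the trend line that the dashboard items above call for.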

Scaling Automation Across Teams

At scale, automation is cultural.

You need:

- Contribution standards.

- Code review rules.

- Shared ownership.

- Onboarding playbooks.

- Documentation hubs.

Automation stops being “QA code” and becomes a company asset shared by QA, Dev, and DevOps.

Business Impact of Reliable Automation

When automation is reliable, organizations gain:

- Faster release cycles.

- Lower defect leakage.

- Reduced manual testing cost.

- Better developer experience.

- Continuous confidence in delivery.

Reliability transforms automation from a cost center into a growth enabler.

Automation success is not defined by frameworks.

It is defined by engineering discipline.

Build:

✔ isolation

✔ observability

✔ architecture

✔ data strategy

✔ business alignment

And your automation will scale with your product instead of breaking under it.

Reliable automation is the foundation of modern QA engineering.